There is growing awareness worldwide of how AI applications can affect the privacy of citizens, organizations, and governments. Privacy in AI relates to guarding sensitive information, gaining consent for the use of that data, ensuring models do not divulge protected data, using models in a way that respects privacy, and meeting emerging laws and regulations around the world that encourage and mandate attention to privacy.

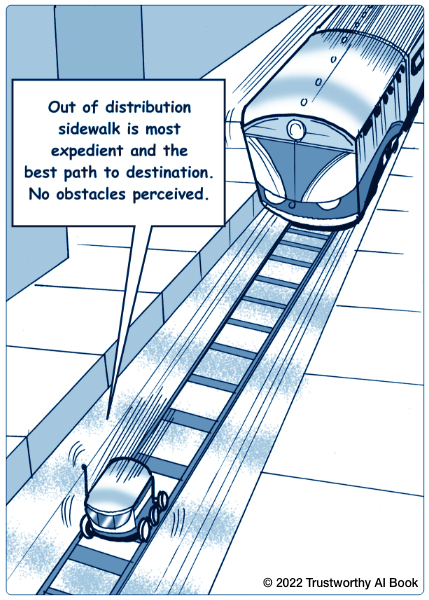

Individual control over personal data becomes impossible when an AI tool acts on group classifications, when it derives insights that are accurate but were inferred rather than obtained with consent, or when personal data is deduced from information that others in a social group have consented to share. Ultimately, the ethical debate over creating and using AI that respects privacy comes down to controlling data and access to it so that its use does not harm the individual.

Yet privacy considerations can diverge across geographies and between organizations, and every company using AI must contend with the patchwork of privacy requirements and expectations in the places where it operates. For enterprises integrating AI systems throughout their operations, this raises essential questions. Enterprises must understand what data is being collected and whether customers and others have consented to its collection and use. They need to create opportunities for consumers to opt in to data sharing, and if personal data is collected, the organization needs the capacity to obscure or hide the most sensitive information.
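
As a rough illustration of the last two points, the sketch below gates a record on an opt-in flag and masks its most sensitive fields before passing it to an AI system. Everything in it is an assumption made for illustration: the `CustomerRecord` type, the `opted_in` flag, the `prepare_for_ai` helper, and the choice of which fields count as sensitive are hypothetical, and the email pattern is deliberately simple. A real deployment would follow the organization's own data inventory and the legal requirements of each place it operates.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Deliberately simple email pattern, for illustration only.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


@dataclass
class CustomerRecord:
    """Hypothetical record; the field names are assumptions."""
    name: str
    email: str
    notes: str
    opted_in: bool = False  # sharing is off unless the person opts in


def prepare_for_ai(record: CustomerRecord) -> Optional[dict]:
    """Return a masked copy of the record, or None if consent is missing."""
    if not record.opted_in:
        return None  # no consent recorded: the data is not passed on
    return {
        # Direct identifiers are hidden entirely.
        "name": "[REDACTED]",
        "email": "[REDACTED]",
        # Free text is kept, but email addresses inside it are masked.
        "notes": EMAIL_RE.sub("[EMAIL]", record.notes),
    }


if __name__ == "__main__":
    consenting = CustomerRecord(
        name="Ada",
        email="ada@example.com",
        notes="Contact at ada@example.com about renewal",
        opted_in=True,
    )
    print(prepare_for_ai(consenting))
    print(prepare_for_ai(CustomerRecord("Bob", "bob@example.org", "hi")))
```

Defaulting `opted_in` to `False` reflects the opt-in framing above: absent an explicit choice by the consumer, nothing is shared.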