Despite their power and potential, AI tools are still just mathematical models. What happens when an AI makes a decision that harms an individual or organization? The model itself cannot face real consequences. Being accountable for AI, and deciding when and how it should be used, therefore remains a uniquely human ethical task.

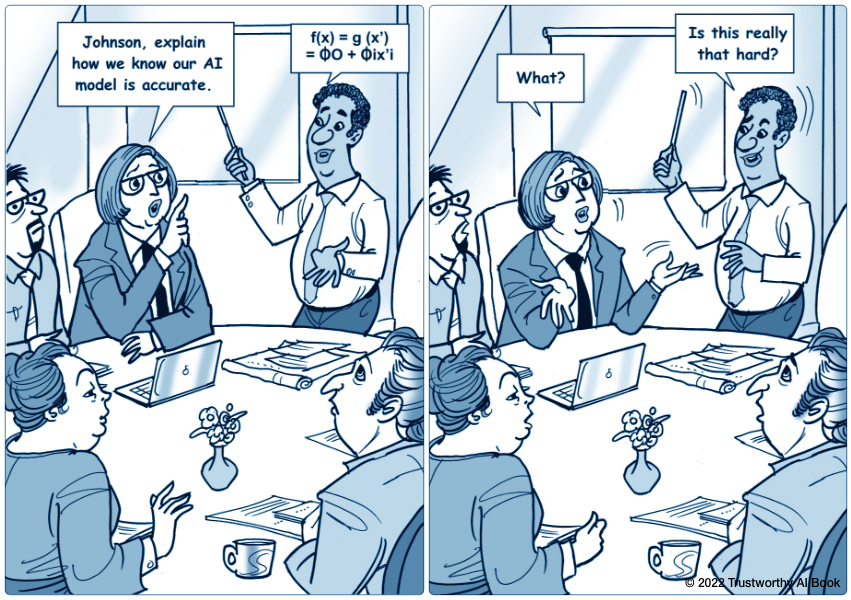

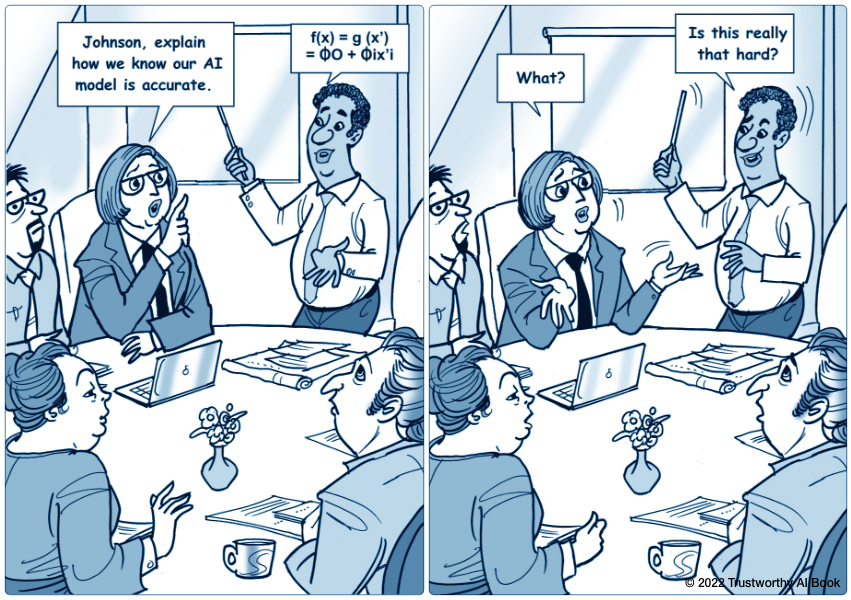

Accountability can be seen in three ways: as a feature of the AI system, as a determination of individual or group responsibility, and as a quality of the social and technological systems in which these tools operate. For AI, accountability means not only that the system can explain its decisions, but also that the stakeholders who develop and use it can explain both the system's decisions and their own, and understand that they are accountable for those decisions.

A related but distinct dimension is responsibility, in which stakeholders ask not just whether they can develop and deploy a given AI tool, but whether they should. Determining whether an AI application is a responsible choice is a whole-of-enterprise activity, involving every stakeholder from the executive suite to the most junior data scientist. It demands a shared sense of accountability and an acknowledgement that working with AI carries a necessary standard of care.