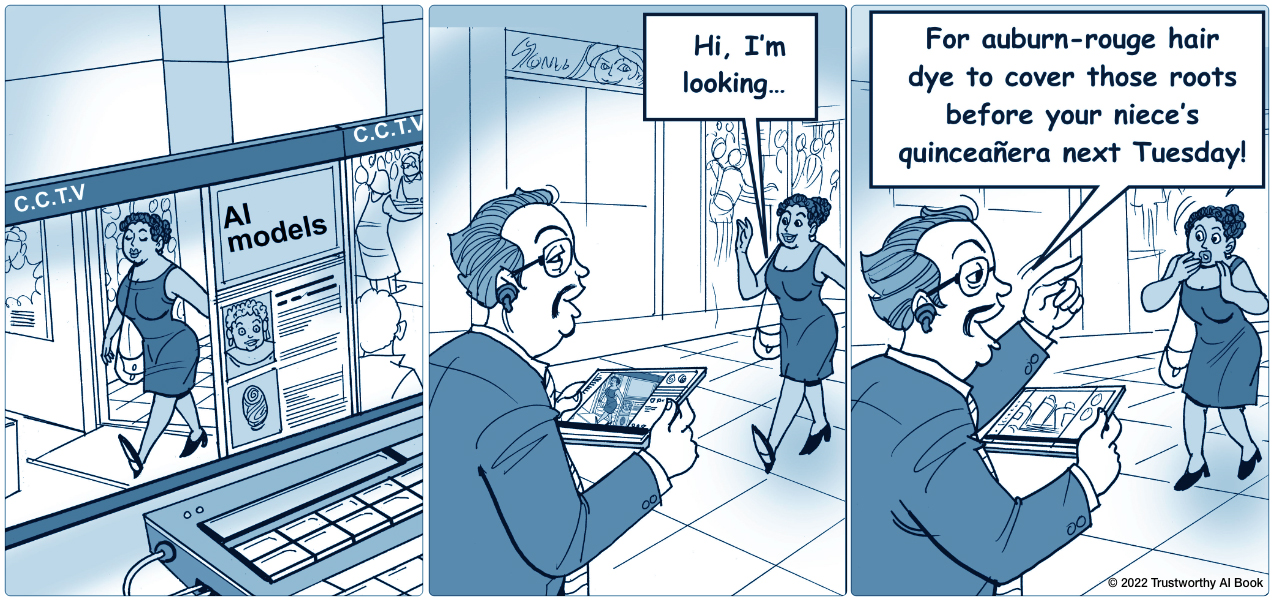

When AI outputs are inconsistently accurate, the result is uncertainty; when models function as intended only within narrow use cases, trustworthiness can suffer in other applications. Data scientists are challenged to build provably robust, consistently accurate AI models in the face of changing real-world data. Two vital components of trustworthy AI, then, are robustness across applications and reliability of outputs.

In a robust model, error rates are nearly the same across datasets. In a reliable model, accuracy holds even when the AI encounters a novel or “out-of-distribution” input. Whether it meets unexpected data in operation or must run in less-than-ideal conditions, a robust and reliable AI tool continues to deliver accurate outputs.
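
To make the robustness definition concrete, here is a minimal Python sketch. It assumes, as an illustration not taken from the text above, that robustness can be summarized as the spread between the highest and lowest error rates a model shows across several evaluation datasets. The functions `error_rate` and `robustness_report`, the toy threshold model, the sample datasets, and the 0.05 `tolerance` are all hypothetical. The sketch does not test reliability on out-of-distribution inputs.

```python
"""Hypothetical robustness check: compare one model's error rate across datasets.

Assumption: robustness is summarized as the spread (max minus min) of the
error rates, compared against a tolerance chosen for the use case.
"""
from typing import Callable, Dict, Sequence, Tuple

Example = Tuple[float, int]  # (feature value, true label)


def error_rate(predict: Callable[[float], int], data: Sequence[Example]) -> float:
    """Fraction of examples the model labels incorrectly."""
    if not data:
        raise ValueError("dataset must not be empty")
    wrong = sum(1 for x, y in data if predict(x) != y)
    return wrong / len(data)


def robustness_report(
    predict: Callable[[float], int],
    datasets: Dict[str, Sequence[Example]],
    tolerance: float = 0.05,  # hypothetical threshold; set it by the stakes of the use case
) -> Tuple[Dict[str, float], float, bool]:
    """Return per-dataset error rates, their spread, and whether the spread is within tolerance."""
    rates = {name: error_rate(predict, data) for name, data in datasets.items()}
    spread = max(rates.values()) - min(rates.values())
    return rates, spread, spread <= tolerance


def toy_model(x: float) -> int:
    """Stand-in for a trained classifier: predicts 1 above a fixed threshold."""
    return int(x > 0.5)


if __name__ == "__main__":
    datasets = {
        "in_house": [(0.1, 0), (0.9, 1), (0.7, 1), (0.2, 0)],
        "partner": [(0.3, 0), (0.8, 1), (0.6, 0), (0.4, 0)],
    }
    rates, spread, ok = robustness_report(toy_model, datasets)
    print(rates, round(spread, 3), ok)
```

With this toy data, the model makes no errors on `in_house` but misclassifies one of the four `partner` examples, so the spread of 0.25 exceeds the hypothetical tolerance and the check fails. A real evaluation would use far larger datasets.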

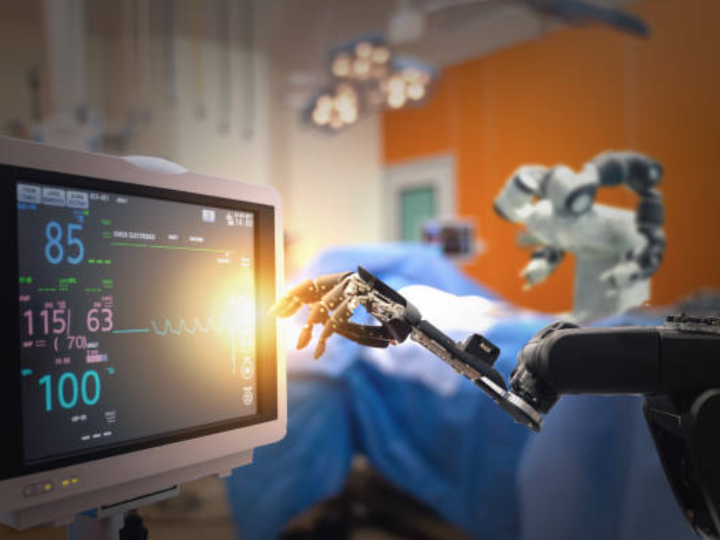

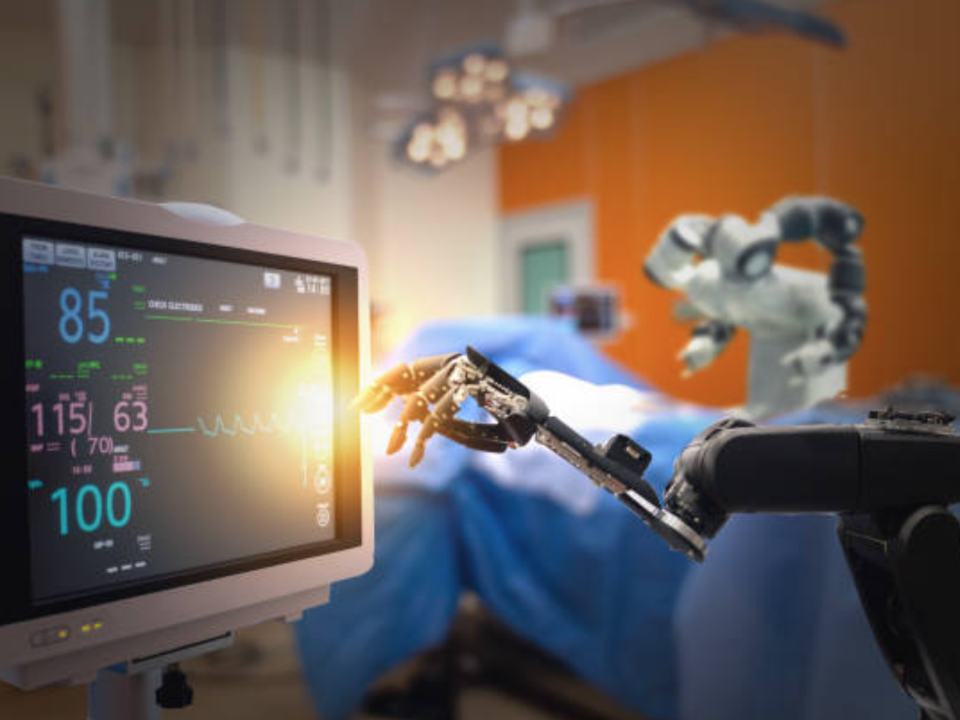

Achieving enduring robustness and reliability, however, can be a moving target. A model is only as good as the data used to train and test it, and real-world operational data needs to be monitored for shifting trends and emerging data science needs. In all AI, the higher the value of the use case, the greater the ramifications of its inaccuracy or failure.
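
Monitoring for shifting trends can take many forms, and the text does not prescribe one. As one illustration, the sketch below uses the Population Stability Index (PSI). PSI is a common drift statistic that compares how training data and operational data fall into the same bins: PSI = Σᵢ (qᵢ − pᵢ) ln(qᵢ / pᵢ), where pᵢ and qᵢ are the shares of reference and current values in bin i. The equal-width binning, the `eps` floor for empty bins, the sample data, and the 0.2 alert threshold are assumptions. The threshold is a commonly cited rule of thumb, not a standard.

```python
"""Hypothetical drift monitor using the Population Stability Index (PSI).

Assumptions: equal-width bins derived from the reference (training) data,
an eps floor so empty bins do not produce log(0), and a 0.2 alert threshold.
"""
import math
from typing import List, Sequence


def bin_edges(reference: Sequence[float], n_bins: int = 5) -> List[float]:
    """Interior edges of equal-width bins spanning the reference data."""
    lo, hi = min(reference), max(reference)
    width = (hi - lo) / n_bins
    return [lo + width * i for i in range(1, n_bins)]


def proportions(values: Sequence[float], edges: Sequence[float], eps: float = 1e-6) -> List[float]:
    """Share of values in each bin; values outside the reference range land in the end bins."""
    counts = [0] * (len(edges) + 1)
    for v in values:
        idx = sum(1 for e in edges if v >= e)  # number of edges at or below v = bin index
        counts[idx] += 1
    total = len(values)
    return [max(c / total, eps) for c in counts]


def psi(reference: Sequence[float], current: Sequence[float], n_bins: int = 5) -> float:
    """PSI between training-time (reference) and operational (current) data."""
    edges = bin_edges(reference, n_bins)
    p = proportions(reference, edges)
    q = proportions(current, edges)
    return sum((qi - pi) * math.log(qi / pi) for pi, qi in zip(p, q))


if __name__ == "__main__":
    training = [i / 100 for i in range(100)]        # roughly uniform on [0, 1)
    stable = [i / 100 + 0.005 for i in range(100)]   # nearly the same distribution
    shifted = [0.5 + i / 200 for i in range(100)]    # mass moved to the upper half
    for name, batch in [("stable", stable), ("shifted", shifted)]:
        score = psi(training, batch)
        print(name, round(score, 3), "ALERT" if score > 0.2 else "ok")
```

Here the nearly identical `stable` batch scores close to zero, while the `shifted` batch leaves the lower bins empty and scores far above the threshold. In practice, a statistic like this would be tracked per feature over time rather than on a single batch.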

Applications for supply chain management, employee scheduling, asset valuation, production errors, talent recruitment, customer engagement, and much more can deliver enormous value, or cause significant harm, depending on the robustness and reliability of the model. The task for enterprises as they grow their AI footprint is to weigh these dimensions as part of their AI strategy and then align processes, people, and technologies to detect and correct errors in a dynamic environment.