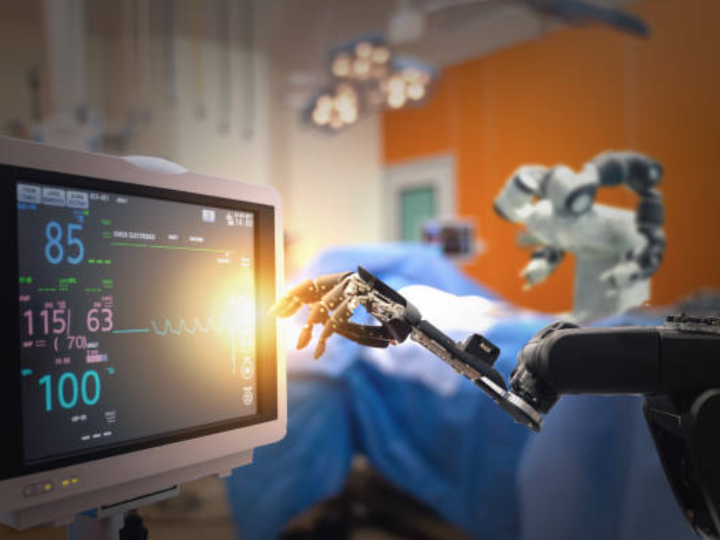

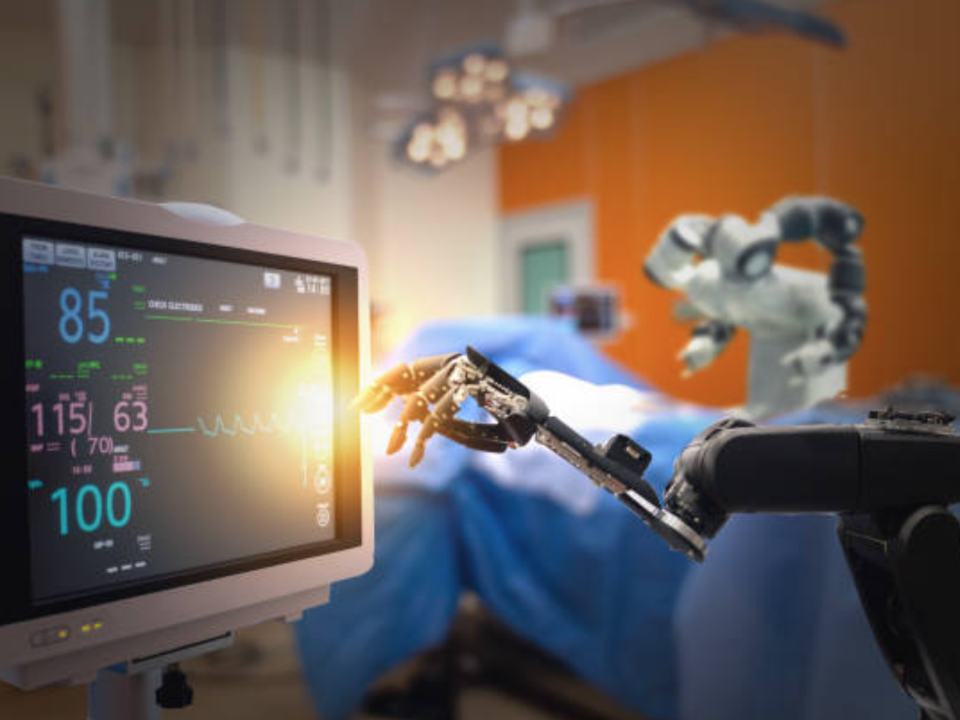

Trusting powerful AI that can change every industry requires systems that can be secured against a variety of threats, many of which are not yet imagined, much less manifest. The path forward requires an awareness of how AI tools may become compromised, the implications of such a compromise, and plans and processes that keep security at the forefront of strategy and deployment throughout the AI lifecycle.

There are a variety of tactics cybercriminals might use to make an AI system operate counter to its intended design and training, including data poisoning, transfer learning attacks, and reverse engineering. New methods will surely arise, and other tactics may be more effectively defeated as tools and security engineers work to keep models ahead of the threats.

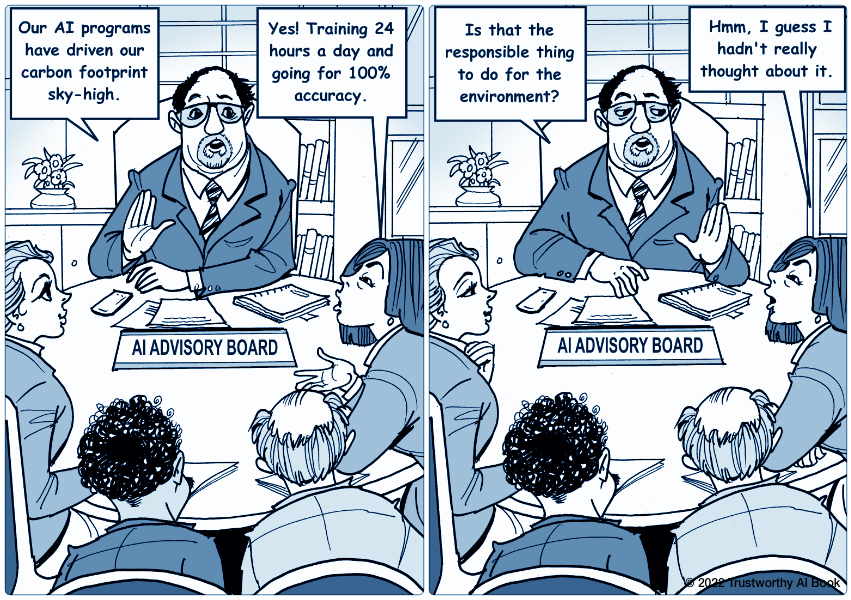

Meanwhile, what does it mean for something to be safe? Most simply, it means causing no harm. But what is harm? This is a murkier question. Harms span the physical, psychological, economic, environmental, and legal realms. AI does not know what a human is, much less what harm is, what its degrees are, or how to weigh the moral tradeoff between an action and its potentially negative consequences. Perhaps safety is not a single measure but instead a suite of ongoing activities and processes that touch every part of the AI lifecycle and all of the stakeholders in it.