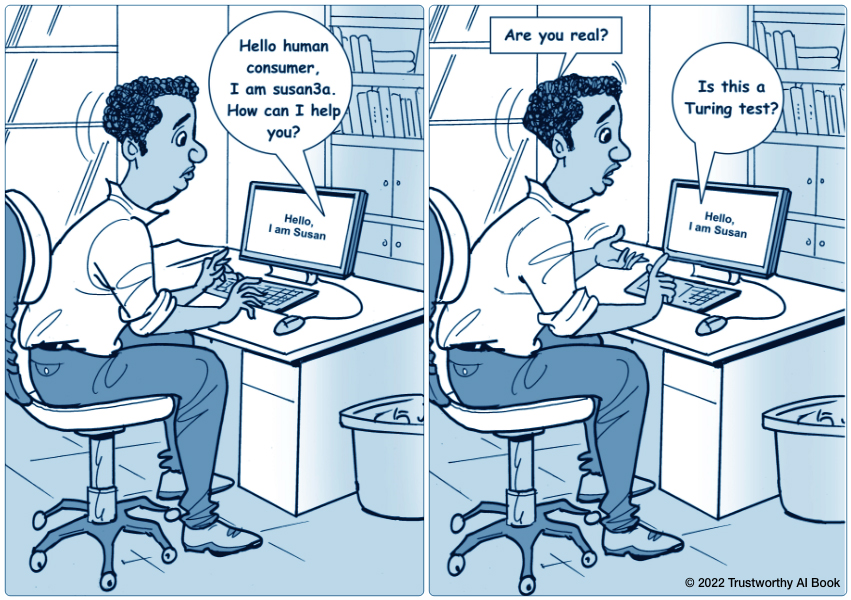

A cross-cutting dimension that impacts all other aspects of ethical AI is transparency. It permits accountability, motivates explainability, reveals bias, and encourages fairness. With sufficient transparency, datasets are understood, algorithms can be traced back to their training data, and the accuracy of deployed solutions (or the lack of it) is known.

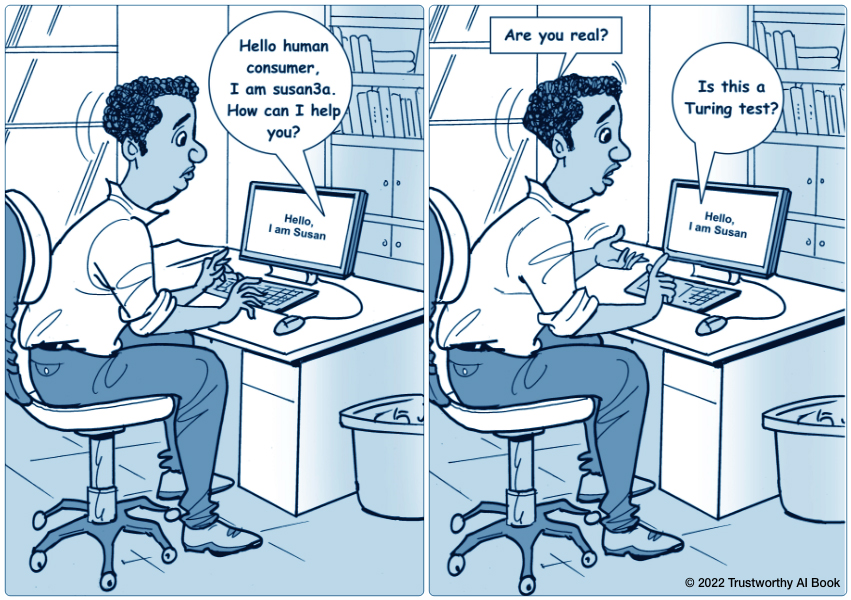

Transparency in AI is not a quality of a tool but the way in which an organization shares information about the system’s components and function and promotes understanding of them among different stakeholders. With this, all parties have the awareness and insight into AI systems they need to make informed choices about the system as it relates to their role, be it as an executive, a manager, or a consumer.

A component of a transparent system is explainability, which means it is possible to understand how an AI output was calculated. The more explainable the system, the greater the human understanding of the AI’s internal mechanics, and the better equipped an individual is to make informed choices in interacting with or applying the model. The challenge for every enterprise leader is to determine who requires explainability in AI function, which type of explainability they need, how that impacts the business, and how a transparent AI lifecycle can engender confidence and trust in cognitive tools.